Re-Creating the Barkla HPC Cluster in the Cloud during a Complete HPC Systems Relocation

One of the most exciting projects we undertook in 2019 was the move of the University of Liverpool’s HPC cluster, Barkla, into its new home. What made the move even more exciting was that, while Barkla was being settled into its new data-centre, it was business as usual for its users in the cloud.

The Advanced Research Computing Facilities at the University of Liverpool have been pioneers in cloud HPC since taking the decision to bring cloud into their portfolio in 2017. They were also one of our first clients to embrace working collaboratively through managed services, freeing their team to move into roles focused on user innovation. This meant that when it came time for the Barkla cluster to relocate to a new data-centre, the team on the ground at the University of Liverpool was best placed to map out the features most critical to the system. Creating a “cloud Barkla” was a project undertaken not only to ensure that users had uninterrupted service during the scheduled two-week downtime, but also to explore critical requirements such as data access, disaster recovery, and cloud bursting.

The calm before the storm.

To plan the migration we needed a trial run of the system design, which we were able to schedule during a planned system shutdown. This four-and-a-half-day period would give first-hand insight into how users would engage with a cloud version of Barkla, and provide important information on how the longer fourteen-day outage was likely to play out.

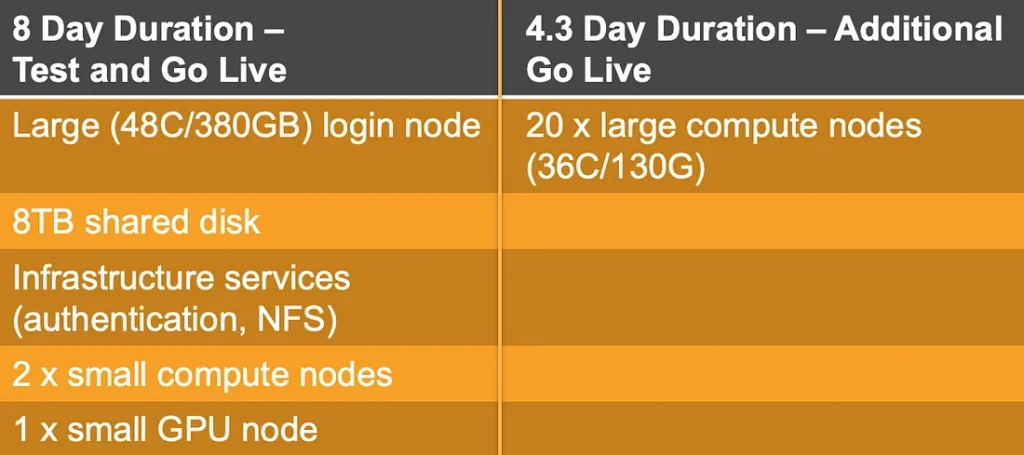

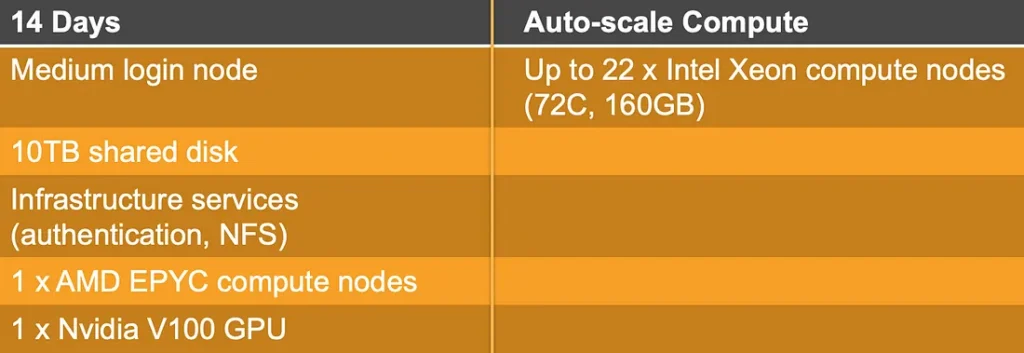

We started in a pre-Go Live environment, establishing the login node, shared disk and infrastructure systems, along with a permanent set of compute nodes that would run for the full duration of the project (eight days in total) and a secondary set of compute nodes that would run only during the four and a half days of shutdown.
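To make the two-pool layout concrete, here is a minimal sketch of the node-hour arithmetic behind it. The eight-day and four-and-a-half-day durations come from the trial described above; the node counts (4 and 16) and the `NodePool` helper are purely hypothetical, chosen only for illustration, and are not the sizes used for cloud Barkla.

```python
from dataclasses import dataclass

HOURS_PER_DAY = 24


@dataclass(frozen=True)
class NodePool:
    """A group of cloud compute nodes that run for the same length of time."""
    name: str
    node_count: int      # hypothetical value, not taken from the project
    days_running: float  # duration taken from the trial description

    def node_hours(self) -> float:
        # Node-hours = number of nodes x days running x 24 hours per day
        return self.node_count * self.days_running * HOURS_PER_DAY


def total_node_hours(pools) -> float:
    """Sum the node-hours consumed across all pools."""
    return sum(pool.node_hours() for pool in pools)


# Permanent pool: runs for the full eight days of the trial project.
permanent = NodePool("permanent", node_count=4, days_running=8.0)
# Secondary pool: runs only during the four and a half days of shutdown.
secondary = NodePool("secondary", node_count=16, days_running=4.5)

if __name__ == "__main__":
    for pool in (permanent, secondary):
        print(f"{pool.name}: {pool.node_hours():.0f} node-hours")
    print(f"total: {total_node_hours([permanent, secondary]):.0f} node-hours")
```

With these illustrative counts, the larger secondary pool accounts for most of the usage even though it runs for roughly half the time, which is why limiting it to the shutdown window matters for the budget.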

Our biggest lesson: Removing user barriers

The biggest takeaway from our test was that we needed to ensure that users felt no barrier to working in the cloud. The test incorporated the use of SSH keys, something that was foreign to the user base, who preferred single-sign-on passwords. Our path forward was to create a Gateway Appliance to provide encrypted network connectivity between clusters. This very small unit could be housed in a secondary data-centre, providing the familiar single-sign-on capability for the cloud Barkla cluster. This very small change, together with a revision of cluster requirements to achieve better budget efficiency through right sizing and autoscaling, led us into the Go Live date with confidence.

Our first success with testing led us to push the boundaries a bit further with the Go Live of cloud Barkla. Being able to work across clouds was a secondary requirement for the University of Liverpool, and during this project we were able to harness the capabilities of their preferred cloud platforms, AWS and Microsoft Azure. This multi-cloud approach tied into their 2020 aim of establishing a proper system of disaster recovery and cloud bursting; verifying that their mission-critical system could operate on both platforms was key to green-lighting the research.

Thanks to the team at Liverpool Advanced Research Computing Facilities we were also able to gain a deeper insight into data storage and recovery. This insight matters not only to this team: data storage and recovery is a significant challenge across the wider HPC community. In the future, understanding data access and quality metrics across multiple platforms will become increasingly important, particularly as datasets grow in both size and complexity. The opportunity to prototype solutions to these issues in a time-limited project will serve the team well as they move into their 2020 projects and goals.

How it was done.

This project was delivered via the Alces Flight Center subscription held by the University of Liverpool. Resources utilised included software from the OpenFlightHPC project, the bare-metal Barkla cluster (a Dell EMC hardware platform), and the AWS and Microsoft Azure cloud platforms. The collaborating teams were Alces Flight and the Advanced Research Computing Facilities at the University of Liverpool. If you would like to know more about how we make HPC happen, feel free to contact us.